Quantum computers won’t replace classical ones anytime soon—but the two might just collaborate. At least, that’s the vision behind ABCI-Q, Japan’s newly launched hybrid supercomputing system, developed by the National Institute of Advanced Industrial Science and Technology (AIST) in partnership with NVIDIA.

On the surface, it appears to be a standard GPU powerhouse, comprising 2,020 NVIDIA H100 GPUs interconnected with Quantum-2 InfiniBand, but underneath lies something far more experimental: a functional ecosystem where classical AI and quantum processors share workloads (NVIDIA, 2025). It’s a production-scale system, hosted at the newly established Global Research and Development Center for Business by Quantum-AI Technology (G-QuAT), designed to test the limits of hybrid quantum-classical computing across real-world applications.

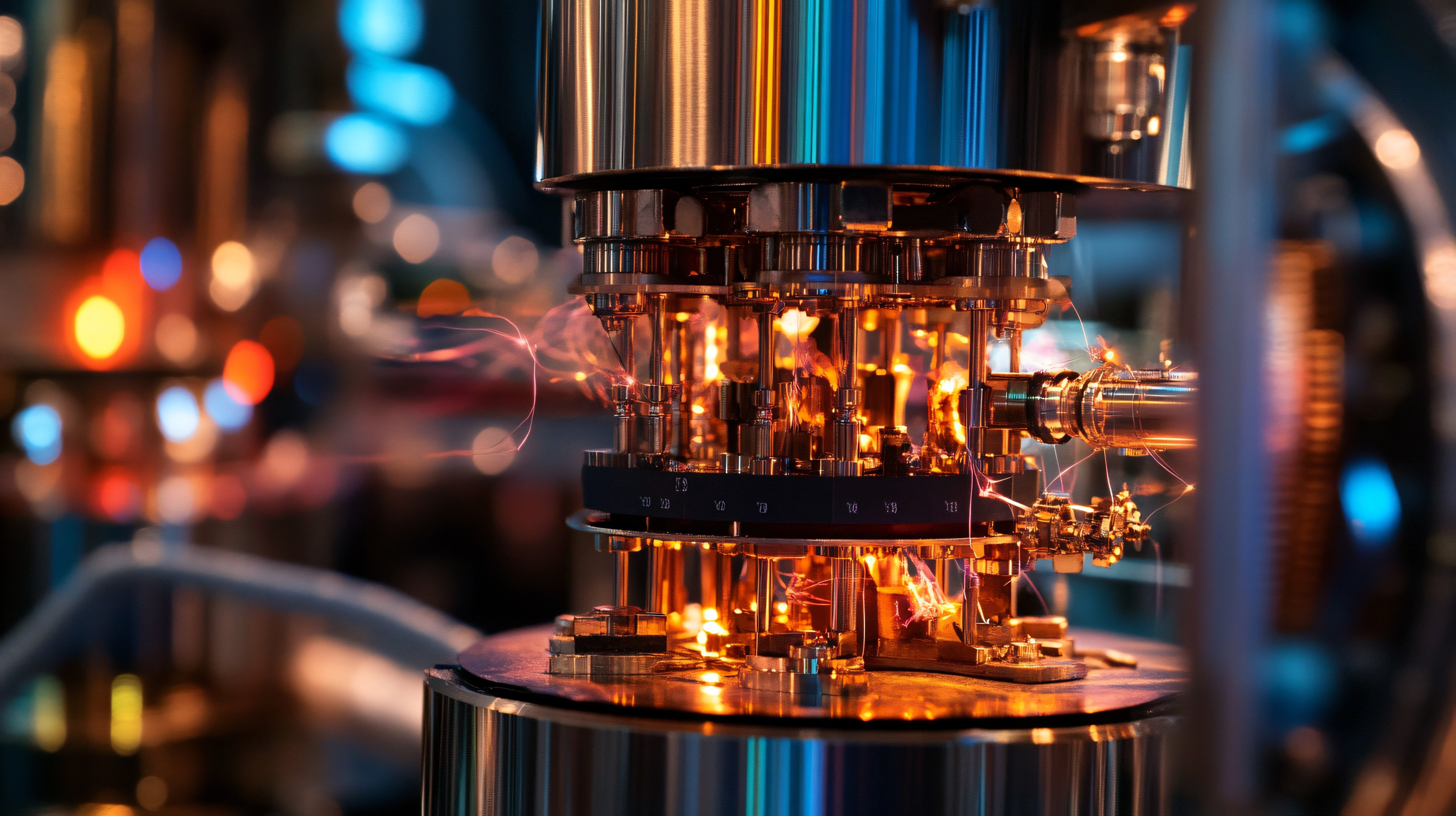

The classical layer is optimised for massive throughput and parallelism. The experimental layer is a modular fusion of quantum hardware sourced from Fujitsu (superconducting qubits), QuEra (neutral atoms), and OptQC (photonic systems). These diverse modalities operate within a unified system that allows researchers to test, iterate, and compare quantum technologies at scale.

It’s a rare configuration that allows researchers to run comparative benchmarks, test quantum error correction, and prototype hybrid algorithms in real time.

Right now, CUDA-Q, NVIDIA’s open-source platform, enables developers to write quantum-classical applications that can scale. Think of it as a programming framework that treats a quantum computer like another GPU in the data centre—abstracting the weirdness behind usable APIs.

For hybrid systems to function effectively, the interaction between classical and quantum components must be fast, flexible, and iterative. CUDA-Q makes that possible, enabling developers to run variational algorithms or simulate quantum workloads at scale—all while seamlessly switching between simulation and real hardware.
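To make the "fast, flexible, and iterative" loop concrete, here is a minimal sketch of a variational algorithm in plain Python. It is not CUDA-Q code and does not use any CUDA-Q API: the names `simulated_expectation`, `parameter_shift_gradient`, and `minimize` are hypothetical, and the "quantum" step is a one-qubit toy whose expectation value is computed analytically. The point is only the shape of the workflow: a classical optimizer repeatedly asks a backend for an expectation value, and that backend is a plain callable that could, in principle, be swapped for real hardware.

```python
import math
from typing import Callable, Tuple

# Hypothetical stand-in for a quantum backend (an assumption, not a real API).
# It models preparing Ry(theta)|0> and measuring Z, for which <Z> = cos(theta).
def simulated_expectation(theta: float) -> float:
    return math.cos(theta)

def parameter_shift_gradient(expectation: Callable[[float], float],
                             theta: float) -> float:
    # Parameter-shift rule for a single rotation gate:
    # d<Z>/dtheta = (E(theta + pi/2) - E(theta - pi/2)) / 2
    shift = math.pi / 2
    return 0.5 * (expectation(theta + shift) - expectation(theta - shift))

def minimize(expectation: Callable[[float], float],
             theta: float = 0.3,
             learning_rate: float = 0.4,
             steps: int = 100) -> Tuple[float, float]:
    # Classical outer loop: plain gradient descent on the measured value.
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(expectation, theta)
    return theta, expectation(theta)

# The backend is passed in as a callable, so a simulator could be replaced
# by a function that submits the same circuit to a quantum processor.
theta_opt, energy = minimize(simulated_expectation)
print(f"theta = {theta_opt:.4f}, energy = {energy:.6f}")
```

Because the optimizer only sees a function from parameters to a number, the choice between simulation and hardware stays a one-line change at the call site, which is the kind of switching the paragraph above describes.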

Quantum computing has often been framed in binary terms: classical vs. quantum, today vs. tomorrow. ABCI-Q demonstrates a third way that leverages collaboration over competition.

Already, there are plenty of use cases to pique interest:

- Drug discovery: Simulate molecular binding using hybrid quantum chemistry models.

- Finance: Optimize portfolios or run Monte Carlo simulations faster and more precisely.

- AI: Accelerate training by embedding quantum kernels into classical machine learning workflows.

- Supply chains: Solve combinatorial problems like routing and scheduling with quantum-enhanced solvers.

Another factor that might lure organizations to ABCI-Q: it doesn’t force a trade-off between classical and quantum. Instead, it creates a platform where the two evolve together, with the scale, flexibility, and architectural diversity required to shape the next generation of quantum applications, from drug discovery and finance to logistics and machine learning.

So far, no single hardware approach or killer app has emerged as the winner. But systems like ABCI-Q are building the experimental infrastructure needed to get there. NVIDIA, the darling of Silicon Valley and Wall Street, is positioning itself as the glue between worlds, just as it did for AI. The company is betting the first scalable quantum applications won’t come from a breakthrough qubit, but rather from platforms that can bridge the gap between today’s compute and tomorrow’s logic. Computing in the post-Moore's Law era might not come down to quantum vs. classical but rather quantum with classical, at scale.
