When OpenAI introduced ChatGPT Atlas in October , it promised to reinvent the web browser by merging the familiar navigation of a browser with the power of its ChatGPT model (OpenAI, 2025).

“With Atlas, ChatGPT can come with you anywhere across the web—helping you in the window right where you are, understanding what you’re trying to do, and completing tasks for you, all without copying and pasting or leaving the page,” the company said. “Your ChatGPT memory is built in, so conversations can draw on past chats and details to help you get new things done.” Atlas is designed to make web tasks smoother: rather than switching tabs or copy-pasting data into ChatGPT, the AI is embedded in your browsing workflow. Atlas brings features like “browser memories” (context that spans your sites and tasks), an “Agent Mode” (where the AI can navigate, fill forms, research) and deep integration of web and conversation. It signals a shift: browsers are becoming not just tools, but autonomous assistants.

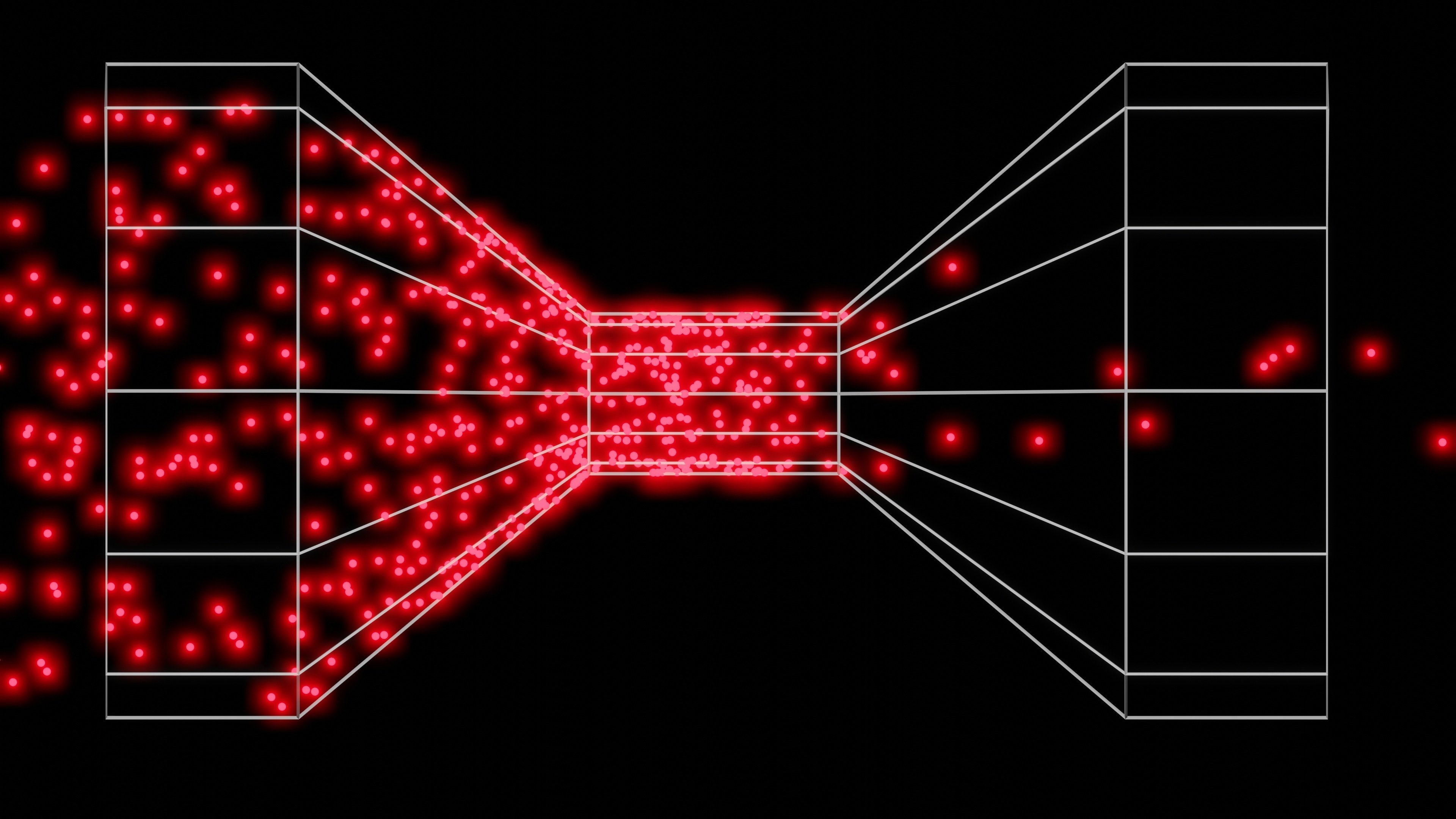

From a productivity standpoint, this is compelling: the browser becomes not just a portal, but a partner. But the very capabilities that make Atlas powerful also create new attack surfaces. Prompt injection in this context means hidden instructions (in webpages, URLs, clipboard content) that the agent treats as legitimate user commands.

But as innovation surfaces, so do sharp warnings. Cybersecurity researchers quickly raised the alarm that this new breed of agentic browsers exposes new vulnerabilities, especially via prompt injection attacks, where malicious instructions are hidden in content that the AI interprets as “user intent.” Security-focused browser company Brave pointed out that this isn’t a ChatGPT-specific issue but a systemic risk for all AI-enabled browsers, including Perplexity’s Comet. “AI browsers that can take actions for users are powerful but fundamentally risky,” their researchers warned. Brave recommends limiting AI agents' access and keeping sensitive activities, like banking, on traditional browsers (Brave, 2025).

A report by the research firm NeuralTrust found the Atlas omnibox (combined address/search bar) can be tricked by “fake URLs” containing embedded instructions. The browser may interpret them as valid prompts and execute unwanted actions (The Hacker News, 2025). At a technical level, the issue hinges on the blurred line between browsing content and browsing commands. Traditional browsers guard against unintended actions via clear separation: user triggers click, form submits, HTTP requests. AI-enabled browsers like Atlas incorporate an agent that interprets natural language and acts, introducing a new class of input: “trusted user intent”.

The most serious concern is prompt injection, a vulnerability where malicious instructions are hidden within websites or other content (Gizmodo, 2025). These can trick the AI into performing unauthorized actions, such as copying sensitive data or opening phishing sites. Security researcher Simon Willison and others have raised red flags, noting that Atlas appears to rely too heavily on users manually monitoring the AI’s behavior, which is impractical and unreliable.

.png?width=1816&height=566&name=brandmark-design%20(83).png)